Live Performance

in the age of super computing [2007]

This fragmentary text, written in summer 2007, was an attempt to sum up my thoughts about performing electronic music live. Almost twenty years later, it needs some background: in 2007, performing with just a laptop was the big thing; it felt liberating and empowering to be able to travel all around the world with only hand luggage and perform. This was before the renaissance of hardware on stage and the explosion of modular synthesis. I no longer agree with everything I wrote, but I still find it worth sharing. (December 2025)

Lots of texts have been written in recent decades about live electronics, especially since so many of us have been occupying the stages of clubs and festivals with our laptops. The term 'laptop performance' was invented to describe this kind of concert, in an attempt to find a catchy phrase for something that is hard to categorise.

I do not like that term so much. To me the laptop is just another musical tool and the only reason why I am using it on stage is the simple fact that it is a portable supercomputer, capable of replacing huge racks of hardware.

The laptop itself does not contribute anything on its own; we do not write a 'Symphony for Dell', perform a 'Suite for six Vaios' or 'Two Crashes for Power PC', unless we want to be very ironic.

What makes it an instrument is the software running on it. And this is where things start to get complicated. The audience looks at a laptop whilst listening to music. But what exactly creates the music, and how the performer interacts with it, is completely opaque. The laptop is not the instrument; the instrument is invisible.

And to obscure things even more, the laptop is not one single instrument, and it is not 'played' by the performer. What really happens is better described as a huge number of instruments played by an invisible band sitting inside the laptop. The only visible part is the performer, conducting the work in a way that looks extremely boring compared with the physical work carried out by a person guiding an orchestra through a symphony. The minimum difference between pianissimo and a wall of noise? It might be one pixel, 0.03mm on screen.

The audience does not even get much useful information from knowing what software is running, since in many cases the software used for performing is not the same as the one used for creating the music. Even if it is, the act of performing is very likely quite different from the process of composing.

Chapter I - The invisible instrument

Every time someone asks me what I do for a living and I answer that I make music, the inevitable follow-up question is which instrument I play. I always turn red and mumble "computer", anticipating spending the next half hour explaining. (Because of my work at Ableton, I nowadays simply say that I am a software guy. Usually no further questions.)

How do you play a computer? Rhythmically banging on the screen? With a bow? With a hammer? (Sometimes!) The strange look one gets when admitting to playing a computer indicates that this instrument does not fit into the typical categories.

A classical non-electronic musical instrument relies on constant interaction from the player in order to produce a sound. The instrument has specific physical properties that define its sound and the way it wants to be played. The music is a result of these properties and the skills of the player. The listener has a sense of what goes on, even if they do not play any instrument themselves. It is obvious that a very small instrument sounds different from a very big one, that an orchestra sounds most massive and most complex if everyone there moves a lot, and that hitting a surface with a mallet creates some sort of percussion, with its sound depending on the shape and material.

A whole world of silent movie jokes is based on this universal experience and knowledge. If hitting someone's head with a pan sounds like a cowbell, the comical element is the mismatch between expectation and result. Now explain to someone why pressing the space bar on a computer creates a sound like a jazz band falling down a stairway one time, and the next time makes no sound at all...

With 'real' instruments it is also obvious that precision, speed, volume, dynamics, richness and variation in sound are the result of hard work, and that becoming a master needs training, education and talent.

There are exceptions to this rule, and it is no surprise that these instruments have some similarity to electronic instruments. Consider, for example, a church organ, which allows the performer to put some weights on the keys and enjoy the resulting cluster of sound without further action, or musical toys capable of playing back compositions represented as an arrangement of spikes on a rotating metal cylinder. The church organ is already a remarkable step away from the intuitively understandable instrument. The organ player is sitting in one place; the sound comes from pipes mounted somewhere else. Replace the mechanical air stops with electromagnetic valves, and the player with a punched paper roll, and the music can be performed with more precision than any human could achieve.

The image above shows an Ampico player piano by Marshall & Wendell, made in the 1920s. (Photo by Marc Widuch / www.faszinationpianola.de)

The player piano allowed composer Conlon Nancarrow to realise compositions unplayable by humans. The harnessing of electricity made complex "self-playing" instruments possible, and with further technological progress in electronics and computer science, those machines became small enough to be affordable and sonically rich enough to interest composers.

If you replace a musician with a sound-generating device directly controlled by a score, you get rid of the unpredictable behaviour of a human being and you gain more precise control over the result. A great range of historical computer music and certainly a huge part of the current electronic (dance) music has been produced without the involvement of a musician playing any instrument in realtime. Instead, the composer acts as a controller, a conductor and a system operator, defining which element needs to be placed where on a timeline. This process is of an entirely different nature than actually performing music; it is much closer to architecture, painting, sculpting, or engineering.

In electronic music this non-realtime process allows for almost infinite complexity and detail: each part of the composition can be modified again and again. We live in a world of musical undo and versioning. A computer is the perfect tool for these kinds of operations. The workflow in software today is much more efficient than the complex studio setups of the pre-laptop times, which were dominated by a giant mixing desk, lots of hardware units and physical instruments. The result of working for a while on a piece of music with current music software might be a piece of audio which would need hundreds of musicians if performed in one go by human beings. How can this be put on stage?

Chapter II - Tape concerts

At the very beginning of computer music, the only way to perform a concert was to play back a tape. Computer-generated music was created in a non-realtime process; the computer took much longer to calculate a sound than the duration of the sound itself. For the design or modification of more complex sounds, this remained the case until the end of the 20th century. (In the mid-1990s one still had to calculate a simple crossfade in ProTools as a non-realtime process!)

As a result, the so-called 'tape concert' was born, and the audience had to accept that a concert meant someone pressing a play button at the beginning and a stop button at the end. Ironically, half a century later, this is what all of us have experienced numerous times when someone performs with a laptop. Trying to re-create a complex electronic composition live on stage from scratch is, most of the time, an impossible and pointless task.

The bottleneck is not that today's computers cannot produce all those layers of sound in realtime, but that one single performer is not able to control the process in a meaningful and expressive way. One cannot perform a symphony alone.

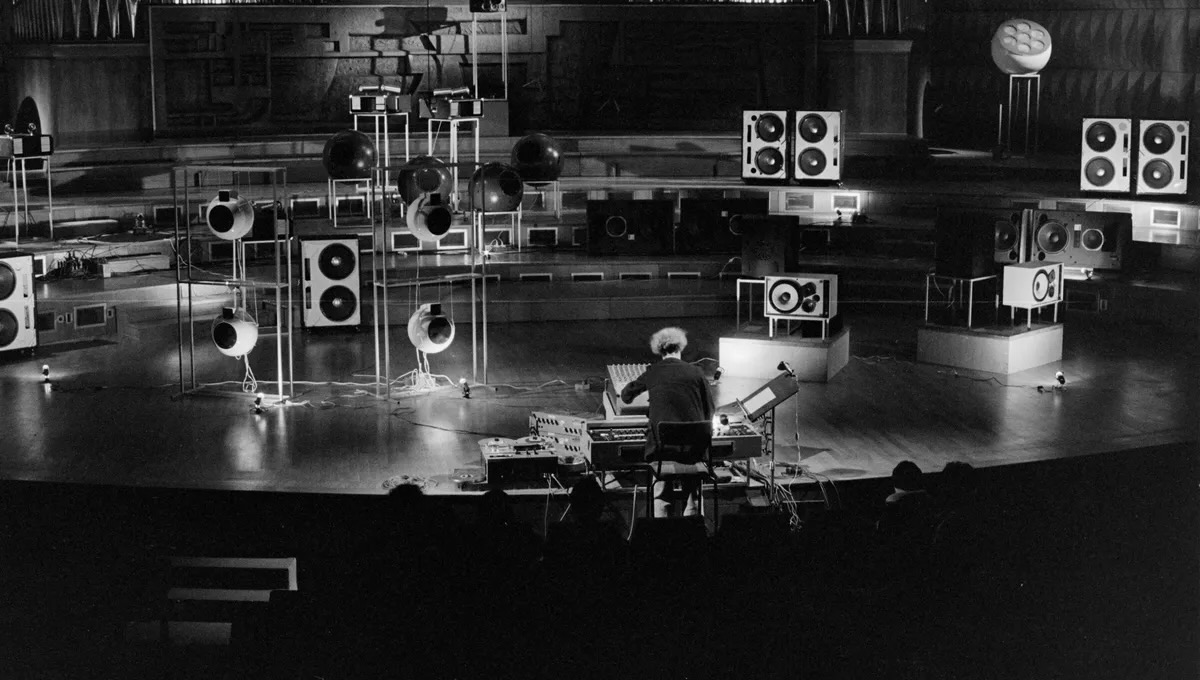

French computer music pioneer François Bayle playing the INA GRM Acousmonium in 1980. Photo by Laszlo Ruszka.

Even back in the 1930s there were already realtime electronic instruments, such as the Theremin or the Trautonium, which Oskar Sala later developed into his Mixtur-Trautonium. They were built for a single player and were, by nature, similar to acoustic instruments in expression and approach. Their complexity was nevertheless limited, and they were never meant to replace a full orchestra. For our purpose of finding ways out of the laptop performance dilemma, the tape concert situation is of much more interest, since it is closer to what we do with our laptops today.

Even though these concerts were referred to as tape concerts, there was the notion of the speaker as the instrument. What the audience could see on stage was loudspeakers; then there was the operator with a mixing desk and the tape machine. The speakers replaced the musicians on stage, whilst the operator sat in the middle of the audience or at the back of the room. Visually it was clear that this person was not a musician, but an operator. There was a very practical reason for this: similar to the conductor, the operator was controlling the sound of the performance, and this could only be done from a position close enough to the audience. This became even more important once composers started to use multiple speakers.

Multiple-speaker tape concerts soon became situations with room for expression by the operator. A whole school of performance is based on the concept of distributing and spatialising stereo recordings to multiple, often different-sounding speakers placed all around the listener. The operator, similar to a good DJ, transports the composition from the medium to the room by manipulating it. The DJ mixes sources together to stereo; the master of ceremonies of a tape concert dynamically distributes a stereo signal to multiple speakers to achieve the most impact. This can be a quite amazing experience, but it certainly needs the operator to be in the eye of the storm, right at the center of the audience.

DJs, tapes and black boxes on stage

The DJ concept has a connection to the tape operator concept: both take pre-recorded pieces and re-contextualise them, one by diffusion in space, the other by combining music with other music. When listening to a choir on a record, we do not assume that tiny little singers are having a good time inside a black disk of vinyl.

We judge the DJ by criteria other than the intonation of the micro-choir. A good DJ set offers everything we normally expect from a good performance. We can understand the process, we can judge the skills, and we can correlate the musical output with the DJ's input; we have learned to evaluate the skill of working with two turntables and a mixer.

The same is true for the distribution of pre-recorded music to multiple speakers. There is a chance we can understand the concept, and this helps us evaluate the quality of the performance more independently of the quality of the piece performed.

Often a classic tape concert comes with an oral introduction or written statement, helping the audience to gain insight into the creation of the presented work. I think this could also work as a model for today's presentation of various kinds of electronic music. However, while in the academic music world tape concerts are well accepted and understood, there seems to be a need for electronic music outside that academic context to be "performed live" and "on stage", regardless of whether this is really possible or not.

When listening to a laptop performance, the audience might compare a completely improvised set (which is indeed also possible with a laptop now, if we accept a reduced complexity of interaction) with a completely pre-recorded set.

The audience cannot really judge the quality of the performance, only the quality of the underlying musical or visual work; they might compare a full playback with a risky improvisation - without being able to distinguish the two. The performers, too, might want to be more flexible and to interact more.

The classical tape concert is an option which works well for scenarios where pre-recorded pieces are presented - and made clear as such to the audience - and where there is room for the operator in the center or at least close to the audience and in front of the speakers. For those reasons it does not really work in a normal dance club context, or as a substitute for a typical rock 'n' roll style "live" concert. If the tape concert is not an option, the key question is: how can we really perform and interact on stage?

Chapter III - The golden age of hardware

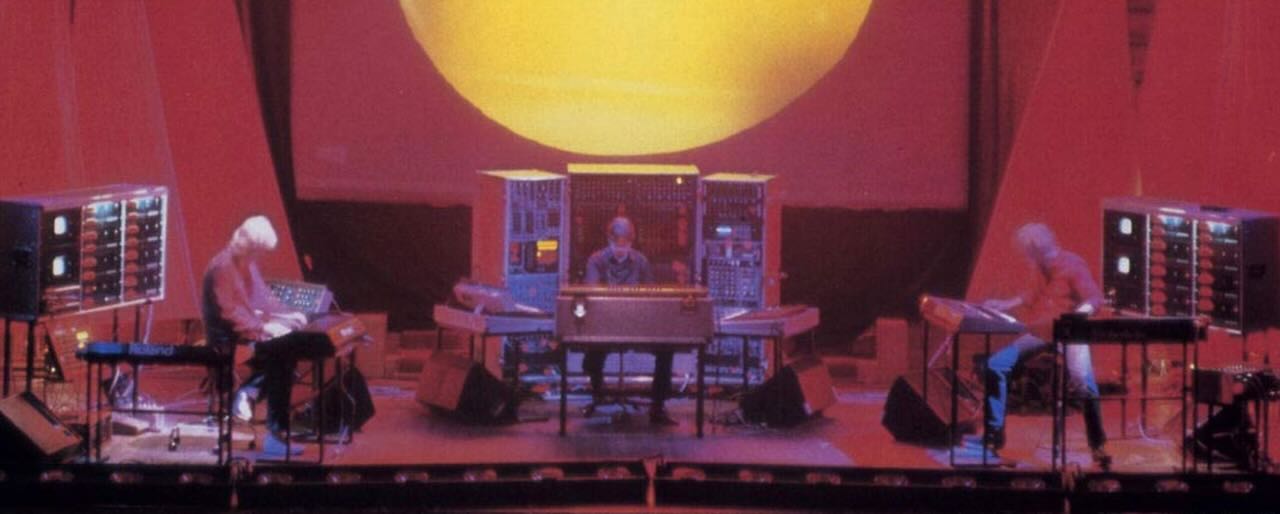

Tangerine Dream: Logos, live in London 1982, album cover excerpt. The big racks on the left and right contain custom computer controlled step-sequencers.

While the creation of academic computer music was from the very beginning more a non-realtime process than an actual performance, the late 1960s saw the development of the first commercial synthesizers, equipped with a piano keyboard, and ready to be played by musicians rather than operated by engineers. Suddenly, electronic sound became accessible to 'real' musicians - this led not only to impressive stacks of Moog, Oberheim, Korg, Arp, Yamaha and Roland keyboards on stage, but also to a public awareness of electronically created sounds. The peak of this development came with the stage shows of electronic post-rock bands like Tangerine Dream and Kraftwerk in the late 1970s and early 1980s.

What the audience saw was musicians operating impressive amounts of technology, blinking lights, large mixing consoles, and shimmering green computer terminals - a showcase of the future - on stage.

It was impossible to understand how all these previously unheard-of new sounds emerged from the machines, but due to the simple fact that there were masses of them and total recall was not possible, the stage became a place where a lot of action was needed throughout the course of the performance.

Cables had to be connected and disconnected from modular synthesizers, faders had to be moved, large floppy disks were carefully inserted into equipment most people had never seen before, let alone owned, and more complex musical lines still had to be played by hand. It was a happening, an event, remarkable and full of technological magic; the concert was a unique chance to experience the creation of electronic music. And in general it really was 'live'. What was set up on stage was nothing less than a complete electronic studio, operated by experts in real time. Great theatre, great pathos, and sometimes even great musical results.

The giant keyboard stacks and effect racks not only looked good on stage, they were the same instruments as the ones used during the creation of the recordings preceding the live concerts. Putting them on stage was the most straightforward way to perform electronic music. This was the golden age of electronic super groups and the era of incredibly expensive hardware. Starting in the late 1980s, inexpensive computer technology changed things dramatically. With the advent of home computer-based MIDI sequencing, creating electronic music became much more affordable. This not only had an influence on the process of creation but also on performance.

Chapter IV - Fame and miniaturisation

If producing electronic music is possible in a bedroom, the content of this bedroom (minus the bed, most of the time) can also be brought on stage. Or down into the basement of an abandoned warehouse, without a stage, right in front of the audience. The revolutionary counterpoint to the giant shows of the previous decades was the low-profile, non-stage appearance of the techno producer in the early 1990s. The equipment became smaller, and the distance between performer and audience became smaller too. The music was often rough, and its structure was simple enough to be decodable as the direct result of actions taken by the performers. Flashing lights on the mixer, all fingers on mutes, eye contact with the partner, and here comes the break! Ecstatic moments, created using inexpensive and simple-to-operate equipment, very close to the audience. Obscure enough to be fascinating, but at the same time an open book to read for those interested, and in every case very direct and - live!!

These precious moments came to an end, driven by the same forces which had enabled them: computers became cheaper and more powerful. More functions could be calculated in real time inside a small box.

This development changed electronic live performance in significant ways. The more complex the musical output created with a laptop became, the less possible it became to re-create it live. A straight techno track made with a Roland TR-808 and some effects and synths can be performed as an endlessly varying work for hours. A mid-1990s drum & bass track, with all its time-stretches, sampling tricks and well-composed breaks, is much harder to produce live, and marks pretty much the end of real live performance in most cases. As a result, most live performances became more tape concert-like again, with whole pieces played back, triggered by a mouse click, and the performer watching the computer doing the work.

This would all be fine if performance practice reflected this, but obviously things did not work out that way. Instead we experienced performers who were more or less pretending to do something essential, or carrying out little manipulations of mostly predefined music. The performers became slaves of their machines, disconnected from their own work as well as from the audience. This also has to do with the second big motor of change: fame.

Fame puts the performer on stage, away from the audience. Miniaturisation puts the orchestra inside the laptop. Fame plus miniaturisation works very effectively as a performance killer.

The intimacy of a small club, with the audience very close to the performer, provided a highly communicative situation, where interaction with the audience was possible, if not actively desired.

But once the artist reaches a certain level of fame, this does not work anymore; the audience gets bigger, and the performer moves on to the big stage. The audience expects from a concert the same full-on listening experience as from records. And this is impossible to deliver in real time. But the artist does not have much of a choice. They play more or less pre-recorded music. From a laptop. Far away from the audience. Elevated but lonely.

This situation not only leads the audience to conclude the person on stage might be checking emails or flight dates, but it is also unsatisfying for the performer. Performing electronic music on a stage without acoustic feedback from the room, completely relying on some monitors, is quite a challenge and often enough far from being fun.

Add to that scenario a sound engineer who has no idea at all about your aesthetics, and no band colleagues who could provide some means of social interaction. Instead, there is just you and your laptop. The best recipe to survive this is to play very loud, with very low complexity, and hope for an audience in a chemically enhanced mode.

Unfortunately, most typical concert situations outside the academic computer music community do not support the idea of playing right in the middle of the audience. In a club, it is often impossible since there is the dance-floor, and you do not want to be there with a laptop on a small table at four in the morning. Even if you do find a situation appropriate for a performance in the middle of the room, maybe at a festival, and succeed after several hours of arguing with the stage manager, you might be confronted with the dynamics of audience expectations:

They want you elevated, they want you on stage, they want to look up to you, they want the 'big show'.

There is an interesting difference between the computer music presenter and a live act. While the tape operator in the middle of the room has perfect conditions for presenting a finished work in the most brilliant way (which might occasionally even include virtuoso mixing desk science rather than static adjustment to match room acoustics), the live act has to fight with situations which are far from perfect and at the same time is expected to be more lively. Given these conditions, it is no wonder that rough and direct live sets are generally more enjoyable, while attempts to reproduce complex studio works on a stage seem more likely to fail.

A rough-sounding performance simply seems to match the visual information we get when watching a person behind a laptop so much better. Even if we have no clue about their work, there is a vague idea of how much complexity a single human being can handle.

If we experience more detail and perfection we most likely will suspect we are listening to pre-prepared music. And most of the time we are right with this assumption.

We could come to the conclusion that only simple, rough and direct performances are real performances, forget about complexity and detail, and the next time we are invited to perform, grab a drum computer, a cheap keyboard and a microphone, and make sure we are really drunk. It might actually work very well.

But what are we going to do if this is not what we want musically?

Written in summer 2007. Mildly revised and re-formatted in December 2025.